One aspect of LiveCode 7.0 that I keep bringing up in my blog posts is the distinction between textual data and binary data. Although LiveCode does not implement data types for scripts, it does use them internally. Being aware of how the engine treats these types is important for getting the maximum speed out of your scripts.

The four basic types that the engine operates on are Text, BinaryData, Numbers and Arrays – there are a number of sub-types for each of these but the majority of them are not important here. Arrays are very different to the other three (for example, you can’t treat a number as an array or vice-versa) so I’ll ignore them in this blog post.

Bird, Plane or Binary?

First things first: what exactly is each of these types?

- Number: integer or floating-point (decimal) value

- Binary: a sequence of bytes

- Text: human-readable text

The distinction between Binary and Text doesn’t seem all that clear; after all, isn’t text ultimately just a sequence of bytes stored somewhere?

The important difference is how you, the script writer, intend to use the data: if you think terms like "char" or "word" are meaningful for your data, it is probably Text. On the other hand, Binary is just a bunch of bytes to which the engine assigns no particular meaning. Another useful rule-of-thumb is that anything a human will interact with is text while anything a computer will use is binary (notable exceptions to this are HTML and XML, which are text-based formats).

Promotions

LiveCode will happily hide issues of binary vs text vs number from you. It is, however, very useful to know exactly how it does this, because getting it wrong can produce unexpected results.

The types used by the engine can be thought of as a hierarchy, with the least meaningful types at the bottom and the most meaningful at the top. The order, from top to bottom, is:

- Number

- Text

- Binary

Inside the engine, types get moved up and down this hierarchy as necessary. A type can always be moved downwards to a less-specific type but cannot always be moved upwards. For example, the string "hello" isn’t exactly meaningful as a number.

One potentially surprising consequence of this is that the code "put byte 1 of 42" will return ‘4’ rather than the byte with value 42. This happens because the conversion from Number to Binary passes through Text, where the number is converted to the string "42", of which the first byte is "4".
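A minimal sketch of that behaviour, assuming LiveCode 7.0 or later so that numToByte and byteToNum are available. The handler name demoPromotion and the variable names are made up for illustration.

```livecode
-- Hypothetical handler: call it from the message box or a button script
command demoPromotion
   local tFirst, tByte

   -- 42 is a Number, so it is converted to the Text "42"
   -- before the "byte" chunk is applied to it
   put byte 1 of 42 into tFirst

   -- To get a single byte whose value is 42, ask for it explicitly
   put numToByte(42) into tByte

   -- Show the numeric value of each byte, separated by a space
   answer byteToNum(tFirst) && byteToNum(tByte)
end demoPromotion
```

If the conversion works as described above, the dialog should show 52 (the code for the character "4") followed by 42.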

Types in a Typeless Language

Long-time LiveCoders amongst you will no doubt now be thinking that LiveCode is a typeless language and wondering how this information can be used. The key to it is simply being consistent in how you use any piece of data.

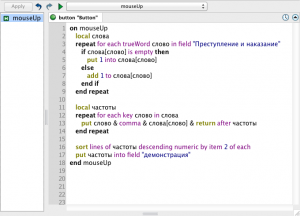

Implicit type-conversion within the engine can be slow, especially for large chunks of data, so you don’t want to do things like this:

answer byte "1" to -1 of "¡Hola señor!"

That short line of code contains a number of unnecessary type conversions. The obvious one is specifying the byte using a string instead of a number. It also contains a conversion of Text to Binary – the "byte" chunk expression indicates you want to treat the input as binary data instead of text. Finally, the "answer" command expects Text, so the engine converts the result back again.

Although the example is contrived, you do need to be careful with chunk expressions. Using "byte" means that you want the data to be Binary while any other chunk type is used for Text.
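As a rough illustration of being consistent, the contrived line could be split into a Text-only version and a version that converts to Binary once, on purpose. This is only a sketch: the handler name demoConsistentTypes is hypothetical, and UTF-8 is an arbitrary choice of encoding.

```livecode
-- Hypothetical handler showing one type per piece of data
command demoConsistentTypes
   local tText, tBytes, tCount

   put "¡Hola señor!" into tText

   -- Text in, Text out: "char" keeps the data as Text throughout,
   -- and the chunk positions are plain numbers
   answer char 1 to -1 of tText

   -- When bytes really are wanted, convert once and explicitly
   put textEncode(tText, "UTF-8") into tBytes
   put the number of bytes in tBytes into tCount
   answer tCount
end demoConsistentTypes
```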

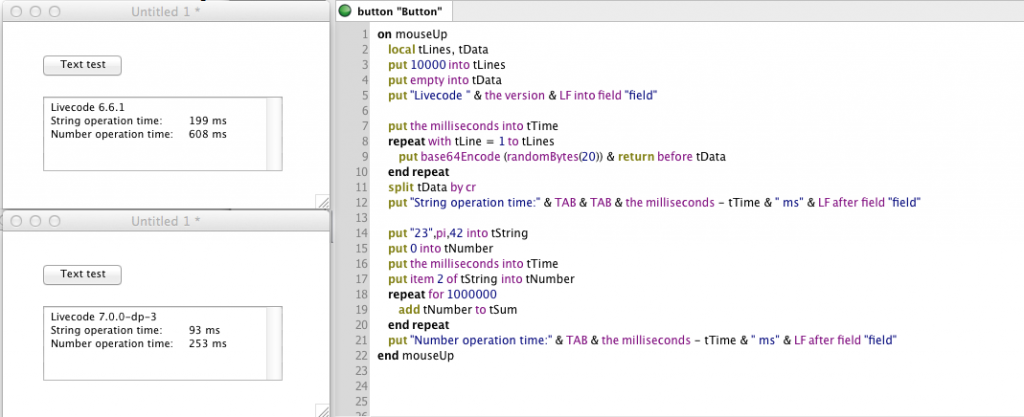

Text is Slow

It isn’t just type conversions that are slow; treating data as the wrong type can also be slow. In particular, comparison operations are slower for Text than for Binary or Number. To compare text, the caseSensitive and formSensitive properties have to be taken into consideration; case conversion and text normalisation operations mean that the Text operations are slower than the equivalent for Binary.
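The difference can be seen with a small comparison sketch. The handler name demoComparison is hypothetical, and the sketch assumes the caseSensitive property is at its default of false.

```livecode
-- Hypothetical handler comparing the same letters as Text and as Binary
command demoComparison
   local tTextSame, tBytesSame

   -- Text comparison has to honour caseSensitive and formSensitive,
   -- so the engine does extra work before it can compare
   put ("ABC" is "abc") into tTextSame

   -- Binary on both sides of "is": the bytes are compared as they are
   put (textEncode("ABC", "UTF-8") is textEncode("abc", "UTF-8")) into tBytesSame

   answer tTextSame && tBytesSame
end demoComparison
```

With the default settings, the first comparison should come out true and the second false, since the upper-case and lower-case letters are different bytes.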

On the other hand, Text is far more flexible than Binary. Operations on Binary are limited to the following (anything outside this will result in conversion to Text):

- "is" and "is not" (must be Binary on both sides of the operation)

- "byte" chunk expressions

- "byteOffset" function

- concatenation ("put after", "put before" and "&" but not "&&")

- I/O from/to files and sockets opened as binary

- "binfile:" URLs

- functions explicitly dealing with binary data (e.g. compress, base64encode)

- properties marked as being binary data
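Several of the operations in this list can be combined into a short sketch that should keep its data as Binary from start to finish. The handler name demoBinaryOnly, the file name example.dat and the two-byte marker are all assumptions made for illustration.

```livecode
-- Hypothetical handler that only uses operations from the list above
command demoBinaryOnly
   local tPath, tData, tMarker, tPos, tFound

   -- Hypothetical file location; "binfile:" reads it as Binary
   put specialFolderPath("documents") & "/example.dat" into tPath
   put URL ("binfile:" & tPath) into tData

   -- Binary & Binary stays Binary ("&&" would insert a Text space)
   put numToByte(255) & numToByte(216) into tMarker

   -- byteOffset searches bytes, not characters
   put byteOffset(tMarker, tData) into tPos

   if tPos > 0 then
      -- "byte" chunks keep the result as Binary
      put byte tPos to (tPos + 1) of tData into tFound

      -- "is" with Binary on both sides compares bytes
      if tFound is tMarker then
         answer "Marker found at byte" && tPos
      end if
   end if
end demoBinaryOnly
```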

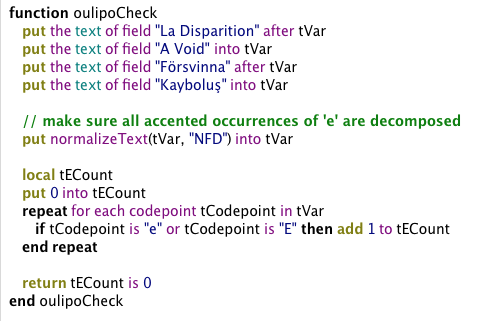

Text Encodings and Strict-Mode

Those of you who have been paying attention to my previous blog posts (if you exist!) will have heard me mention that to convert between Text and Binary you need to use textEncode and textDecode. With these functions, you specify an encoding. But when the engine does it automatically, what encoding does it use?

The answer is the "native" encoding of the OS on which LiveCode is running. This means "CP1252" on Windows, "MacRoman" on OSX and iOS and "ISO-8859-1" on Linux and Android. All of these platforms fully support Unicode these days but these were the traditional encodings on these platforms before the Unicode standard came about. LiveCode keeps these encodings for backwards-compatibility.
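To avoid depending on the platform, the encoding can be named explicitly. The sketch below assumes LiveCode 7.0 or later and that "native" is accepted as an encoding name by textEncode; the handler name demoEncoding is hypothetical.

```livecode
-- Hypothetical handler contrasting an explicit encoding with the native one
command demoEncoding
   local tText, tUtf8, tNative, tUtf8Count, tNativeCount

   put "¡Hola señor!" into tText

   -- Explicit encoding: the same bytes on every platform
   put textEncode(tText, "UTF-8") into tUtf8

   -- Native encoding: the bytes depend on the OS LiveCode is running on
   put textEncode(tText, "native") into tNative

   put the number of bytes in tUtf8 into tUtf8Count
   put the number of bytes in tNative into tNativeCount
   answer tUtf8Count && tNativeCount

   -- Decode with the same encoding that was used to encode
   answer textDecode(tUtf8, "UTF-8")
end demoEncoding
```

The UTF-8 form should be the longer of the two, because "¡" and "ñ" each take two bytes in UTF-8 but only one in the single-byte native encodings.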

The reason LiveCode continues to automatically convert between these encodings is that the engine previously did not treat Text and Binary any differently (one impact of this is having to set caseSensitive to true before doing comparisons with binary data). In some situations, you might want to turn this legacy behaviour off.

To aid this, we are planning to (at some point in the future) implement a "strict mode" feature similar to the existing Variable Checking option – in this mode, there will be no auto-conversion between Binary and Text and any attempt to do so will throw an error (similar to using a non-numeric string where a number is expected). Like variable checking, it will be optional but using it should help find bugs and potential speed improvements.
